A better way to organize UI rendering?

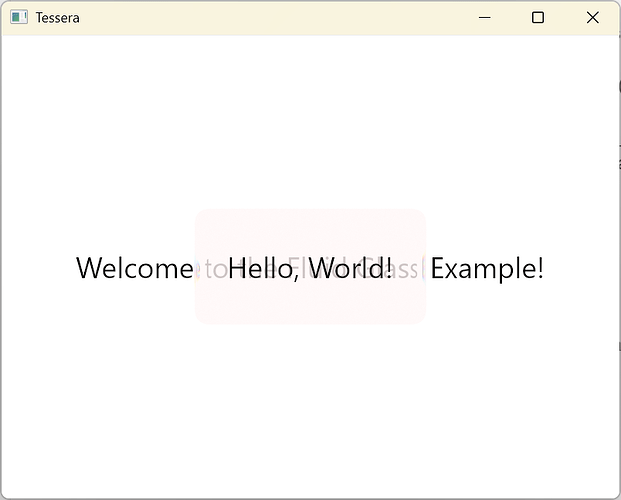

⚓ Rust 📅 2025-07-13 👤 surdeus 👁️ 22

I previously posted about my imgui framework, tessera, here; the GitHub repo is here. Recently, I've been refactoring how it organizes rendering (including the compute and render pipelines), and I'm not quite satisfied with the result. Do you have any better ideas?

Current Architecture:

First, let's talk about performance issues.

In the current implementation, every component that requires a background blur/post-processing effect (like fluid_glass) independently pushes a series of compute commands (e.g., two BlurCommands for a Gaussian blur) and a draw command with a barrier (BarrierRequirement::SampleBackground). This leads to performance issues such as:

Frequent Texture Copies: The SampleBackground barrier triggers an expensive texture copy (copying the current render result from the render target to a sampleable texture) before each component that needs it is rendered. With several such components on screen, that means one GPU copy plus its synchronization cost per component, which can severely impact frame rate.
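To make the cost concrete, here is a minimal CPU-side sketch that only counts copies; it does not touch the GPU. `Barrier`, `Rect`, `Draw`, and both functions are hypothetical stand-ins, not tessera's actual types. It compares the behavior described above (one copy per sampling command) with one possible alternative: reuse the last background snapshot until a sampling command overlaps something drawn since that snapshot was taken. It assumes a component only samples inside its own rectangle; a real blur reads a margin around it, so the rectangles would need to be inflated by the blur radius.

```rust
/// Hypothetical stand-in for the barrier a draw command can request.
#[derive(Clone, Copy, PartialEq, Debug)]
enum Barrier {
    None,
    SampleBackground,
}

/// Axis-aligned screen rectangle covered by a draw command.
#[derive(Clone, Copy, Debug)]
struct Rect {
    x: i32,
    y: i32,
    w: i32,
    h: i32,
}

impl Rect {
    /// True if the two rectangles share any area (touching edges do not count).
    fn overlaps(&self, o: &Rect) -> bool {
        self.x < o.x + o.w && o.x < self.x + self.w
            && self.y < o.y + o.h && o.y < self.y + self.h
    }
}

/// A draw command reduced to the two facts this sketch needs.
struct Draw {
    barrier: Barrier,
    rect: Rect,
}

/// Copies issued when every SampleBackground command triggers its own copy.
fn copies_naive(draws: &[Draw]) -> usize {
    draws
        .iter()
        .filter(|d| d.barrier == Barrier::SampleBackground)
        .count()
}

/// Copies issued when a snapshot is reused until a sampling command's
/// rectangle overlaps something drawn after the snapshot was taken.
fn copies_coalesced(draws: &[Draw]) -> usize {
    let mut copies = 0;
    let mut have_snapshot = false;
    // Regions drawn since the last copy; the snapshot is stale there.
    let mut dirty: Vec<Rect> = Vec::new();
    for d in draws {
        if d.barrier == Barrier::SampleBackground {
            let stale = !have_snapshot || dirty.iter().any(|r| r.overlaps(&d.rect));
            if stale {
                copies += 1;
                have_snapshot = true;
                dirty.clear();
            }
        }
        dirty.push(d.rect);
    }
    copies
}
```

Under these assumptions, three glass panels laid side by side over one background need three copies in the naive scheme but only one when the snapshot is shared. Panels that overlap each other still force a fresh copy.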

Next is flexibility. The current compute pipeline can only be used for simple texture computations and post-processing, for example:

    // The following pseudo-code is excerpted from the `fluid_glass` component, showing how commands are generated.
    // This code is executed for each `fluid_glass` instance during the UI component's layout and measurement phase.

    // 1. If a blur effect is needed, add blur commands.
    if args.blur_radius > 0.0 {
        // Gaussian blur requires two passes, horizontal and vertical.
        let blur_pass_1 = BlurCommand::new(/* direction: horizontal */);
        let blur_pass_2 = BlurCommand::new(/* direction: vertical */);

        // The commands are pushed to the current UI node's metadata, waiting for the renderer to process them.
        metadata.push_compute_command(blur_pass_1);
        metadata.push_compute_command(blur_pass_2);
    }

    // 2. If contrast adjustment is needed, add the corresponding commands.
    if let Some(contrast_value) = args.contrast {
        // `compute_resource_manager` is used to pass intermediate results (like wgpu::Buffer) between compute shaders.
        let mean_pass = MeanCommand::new(gpu, &mut compute_resource_manager);
        let contrast_pass = ContrastCommand::new(contrast_value, mean_pass.result_buffer_ref());

        metadata.push_compute_command(mean_pass);
        metadata.push_compute_command(contrast_pass);
    }

    // 3. Finally, add the command to draw the glass itself.
    // This draw command will depend on the results of the compute commands above (like the blurred texture).
    let draw_pass = FluidGlassCommand::new(args);
    metadata.push_draw_command(draw_pass);

[Images: glass with `.contrast(0.4)`; glass with `.blur_radius(5.0)`]

This means that every ComputeCommand is created as part of a specific UI component's rendering process. Compute commands are essentially post-processing filters applied to the component's background. Their input (the current scene texture) and output (the processed texture) are managed by the renderer and are tightly coupled to the final DrawCommand.
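The coupling can be pictured with a simplified model of the per-node metadata. This is only a sketch under assumptions: the trait shapes, the `name` method, the `NodeMetadata` struct, and `execution_order` are hypothetical and exist purely for illustration; only the names `BlurCommand`, `FluidGlassCommand`, `push_compute_command`, and `push_draw_command` come from the excerpt above, and their real signatures differ.

```rust
/// Hypothetical trait: a compute step that filters the sampled background.
trait ComputeCommand {
    fn name(&self) -> &'static str;
}

/// Hypothetical trait: a draw step that consumes the filtered background.
trait DrawCommand {
    fn name(&self) -> &'static str;
}

/// Simplified stand-in for one direction of the two-pass Gaussian blur.
struct BlurCommand {
    horizontal: bool,
}

impl ComputeCommand for BlurCommand {
    fn name(&self) -> &'static str {
        if self.horizontal { "blur_h" } else { "blur_v" }
    }
}

/// Simplified stand-in for the command that draws the glass itself.
struct FluidGlassCommand;

impl DrawCommand for FluidGlassCommand {
    fn name(&self) -> &'static str {
        "fluid_glass"
    }
}

/// Per-node command storage: compute commands only ever live next to the
/// draw commands of the same UI node.
#[derive(Default)]
struct NodeMetadata {
    compute: Vec<Box<dyn ComputeCommand>>,
    draw: Vec<Box<dyn DrawCommand>>,
}

impl NodeMetadata {
    fn push_compute_command(&mut self, c: impl ComputeCommand + 'static) {
        self.compute.push(Box::new(c));
    }

    fn push_draw_command(&mut self, d: impl DrawCommand + 'static) {
        self.draw.push(Box::new(d));
    }

    /// Order a renderer would follow for this node in this model:
    /// every compute pass first, then the draws that consume the result.
    fn execution_order(&self) -> Vec<&'static str> {
        self.compute
            .iter()
            .map(|c| c.name())
            .chain(self.draw.iter().map(|d| d.name()))
            .collect()
    }
}
```

In this model a compute command has no way to exist outside a node's metadata, which is exactly why it cannot be scheduled independently of a draw.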

If I want to perform more general computations, such as those unrelated to rendering (like physics simulations), the current implementation can't support it because it's deeply coupled with the rendering pipeline.

Then there's the user experience, which I think is acceptable. For example, here's how contrast is adjusted for the glass component:

    // ...
    if let Some(contrast_value) = args.contrast {
        // compute_resource_manager is used to pass wgpu::Buffer between compute pipelines.
        let mean_command =
            MeanCommand::new(input.gpu, &mut input.compute_resource_manager.write());
        let contrast_command =
            ContrastCommand::new(contrast_value, mean_command.result_buffer_ref());
        if let Some(mut metadata) = input.metadatas.get_mut(&input.current_node_id) {
            metadata.push_compute_command(mean_command);
            metadata.push_compute_command(contrast_command);
        }
    }
    // ...

Compared to more advanced but complex methods like a render graph, this is relatively concise and sufficient for UI scenarios. But perhaps there are better ways to organize the commands?
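For comparison, the core idea of a render graph fits in a few lines: each pass declares which resources it reads and writes, and the execution order is derived from those declarations instead of from push order. The sketch below is a generic illustration, not tessera code; `Pass`, `schedule`, and the resource names are hypothetical, and it assumes each resource has a single writer. It orders passes with Kahn's topological sort.

```rust
use std::collections::{HashMap, VecDeque};

/// A pass described only by the named resources it reads and writes.
struct Pass {
    name: &'static str,
    reads: Vec<&'static str>,
    writes: Vec<&'static str>,
}

/// Returns pass names in an order where every resource is written before it
/// is read, or `None` if the declarations contain a dependency cycle.
fn schedule(passes: &[Pass]) -> Option<Vec<&'static str>> {
    // Map each resource to the pass that writes it (single writer assumed).
    let mut writer: HashMap<&str, usize> = HashMap::new();
    for (i, p) in passes.iter().enumerate() {
        for w in &p.writes {
            writer.insert(*w, i);
        }
    }

    // Build edges writer -> reader and count incoming edges per pass.
    let mut indegree = vec![0usize; passes.len()];
    let mut dependents: Vec<Vec<usize>> = vec![Vec::new(); passes.len()];
    for (i, p) in passes.iter().enumerate() {
        for r in &p.reads {
            if let Some(&w) = writer.get(r) {
                if w != i {
                    dependents[w].push(i);
                    indegree[i] += 1;
                }
            }
        }
    }

    // Kahn's algorithm: repeatedly emit passes with no unmet dependencies.
    let mut ready: VecDeque<usize> =
        (0..passes.len()).filter(|&i| indegree[i] == 0).collect();
    let mut order = Vec::with_capacity(passes.len());
    while let Some(i) = ready.pop_front() {
        order.push(passes[i].name);
        for &d in &dependents[i] {
            indegree[d] -= 1;
            if indegree[d] == 0 {
                ready.push_back(d);
            }
        }
    }

    if order.len() == passes.len() { Some(order) } else { None }
}
```

The trade-off is visible even at this size: components would have to name their inputs and outputs explicitly, which is more ceremony than pushing commands onto node metadata, but the scheduler could then reorder or merge passes and could also host compute work that feeds no draw at all.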
