Seeking Review: An Approach for Max Throughput on a CPU-Bound API (Axum + Tokio + Rayon)

⚓ Rust 📅 2025-09-09 👤 surdeus 👁️ 11

Hi folks,

I’ve been building a minimal Rust codebase aimed at maximum throughput for a REST API whose workload is purely CPU-bound (no I/O waits).

The setup is intentionally small to isolate the problem: the API receives a request, runs a CPU-intensive computation (just a placeholder rule transformation), and responds with the result. Each task takes only a few milliseconds but is compute-heavy, so my goal is to make sure the server uses all available CPU cores effectively.

So far, I’ve explored:

- Using Tokio vs Rayon for concurrency.

- Running with multiple threads to saturate the CPU.

- Keeping the design lightweight (no external DBs, no I/O blocking).
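To make the "hand CPU work to a fixed set of threads" idea concrete, here is a minimal std-only sketch. It is not the code from the repo: `transform`, `Job`, and `CpuPool` are hypothetical names, and the pool is a plain-threads stand-in for what a Rayon pool plus a reply channel would do behind an async handler. The assumption is one worker per available core and a per-request reply channel.

```rust
use std::sync::mpsc::{self, Receiver, Sender};
use std::sync::{Arc, Mutex};
use std::thread;

/// Hypothetical stand-in for the CPU-heavy "rule transformation".
fn transform(input: u64) -> u64 {
    // Deterministic integer mixing, only there to burn some CPU.
    let mut x = input;
    for _ in 0..1_000 {
        x = x
            .wrapping_mul(6364136223846793005)
            .wrapping_add(1442695040888963407);
    }
    x
}

/// A job carries its input plus a channel on which the result is sent back.
struct Job {
    input: u64,
    reply: Sender<u64>,
}

/// Fixed-size pool: one worker per available core, fed from a shared queue.
struct CpuPool {
    queue: Sender<Job>,
}

impl CpuPool {
    fn new() -> Self {
        let workers = thread::available_parallelism()
            .map(|n| n.get())
            .unwrap_or(1);
        let (tx, rx) = mpsc::channel::<Job>();
        let rx = Arc::new(Mutex::new(rx));
        for _ in 0..workers {
            let rx = Arc::clone(&rx);
            thread::spawn(move || loop {
                // The lock guard is dropped at the end of this statement,
                // so the lock is not held while computing.
                let next = rx.lock().unwrap().recv();
                match next {
                    Ok(job) => {
                        // Ignore the error if the caller stopped waiting.
                        let _ = job.reply.send(transform(job.input));
                    }
                    Err(_) => break, // every sender is gone: shut down
                }
            });
        }
        CpuPool { queue: tx }
    }

    /// Submit work; the returned receiver yields the result once computed.
    fn submit(&self, input: u64) -> Receiver<u64> {
        let (reply, result) = mpsc::channel();
        self.queue
            .send(Job { input, reply })
            .expect("worker threads are still running");
        result
    }
}
```

A caller would create one `CpuPool` at startup, call `pool.submit(x)` per request, and read the result with `recv()`. In an async server the blocking `recv()` would be replaced by an awaitable one-shot channel so the request task does not block a runtime thread; that part is left out here to keep the sketch dependency-free.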

What I’d love community feedback on:

- Are there better concurrency patterns or crates I should consider for CPU-bound APIs?

- How can I benchmark throughput fairly and spot bottlenecks (scheduler overhead, thread contention, etc.)?

- Any tricks for reducing per-request overhead while still keeping the code clean and idiomatic?

- Suggestions for real-world patterns: e.g. batching, work-stealing, pre-warming thread-locals, etc.
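On the benchmarking question, one possible baseline is to measure the raw computation without any HTTP or runtime in the way, then compare the server's numbers against it. The sketch below is only an illustration under that assumption: `transform`, `measure`, and `throughput` are hypothetical names, and the workload is a placeholder rather than the repo's actual rule transformation.

```rust
use std::hint::black_box;
use std::thread;
use std::time::{Duration, Instant};

/// Hypothetical stand-in for the CPU-heavy "rule transformation".
fn transform(input: u64) -> u64 {
    let mut x = input;
    for _ in 0..1_000 {
        x = x
            .wrapping_mul(6364136223846793005)
            .wrapping_add(1442695040888963407);
    }
    x
}

/// Run `per_thread` calls on each of `threads` threads.
/// Returns the total number of calls and the wall-clock time they took.
fn measure(threads: usize, per_thread: u64) -> (u64, Duration) {
    let start = Instant::now();
    // Scoped threads are all joined before `scope` returns.
    thread::scope(|s| {
        for t in 0..threads {
            s.spawn(move || {
                for i in 0..per_thread {
                    // black_box keeps the optimizer from discarding the work.
                    black_box(transform(black_box(t as u64 ^ i)));
                }
            });
        }
    });
    (threads as u64 * per_thread, start.elapsed())
}

/// Calls per second for a given run.
fn throughput(calls: u64, elapsed: Duration) -> f64 {
    calls as f64 / elapsed.as_secs_f64()
}
```

Sweeping `threads` from 1 up to `std::thread::available_parallelism()` and comparing `throughput` at each step gives a rough scaling curve. If the server's requests per second sit well below this baseline at the same thread count, the gap is a hint that the overhead lives in scheduling, hand-off, or serialization rather than in the computation. On chips with mixed performance and efficiency cores, scaling is unlikely to be linear, so the curve is more useful as a relative reference than as an absolute target.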

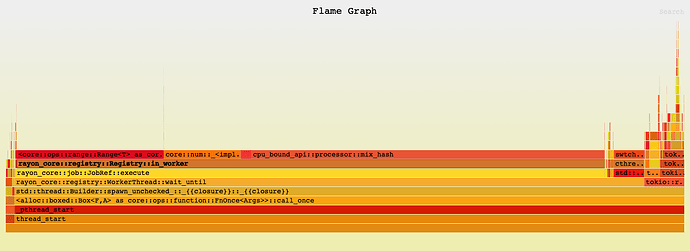

Flamegraph: (Also available in the Repo, on Apple M2 Pro Chip)

I’d really appreciate reviews, PRs, or even pointers to best practices in the ecosystem. My intent is to keep this repo as a reference for others who want to squeeze the most out of CPU-bound workloads in Rust.

Thanks in advance!
